The Confluent Certified Developer for Apache Kafka (CCDAK) certification can make your resume stand out. In the current market, companies do not require this certification, but it definitely adds weight to a job application. Passing the exam gives you documented proof of your command of Apache Kafka.

15 easy steps to pass the Apache Kafka CCDAK exam:

- Familiarize yourself with the exam requirements

- Familiarize yourself with the exam pattern

- Read the official Apache Kafka documentation thoroughly

- Practice while watching the online courses listed below

- LinkedIn Learning courses #0 – Same courses but for free

- Take Udemy course #1 – Learn Apache Kafka for Beginners v2

- Take Udemy course #2 – Kafka Connect Hands-on Learning

- Take Udemy course #3 – Kafka Streams for Data Processing

- Take Udemy course #4 – KSQL for Stream Processing – Hands-On!

- Take Udemy course #5 – Confluent Schema Registry & REST Proxy

- Take Udemy course #6 – Kafka Cluster Setup & Administration

- Take Udemy course #7 – Kafka Security (SSL SASL Kerberos ACL)

- Take Udemy course #8 – Kafka Monitoring & Operations

- Take Udemy course #9 – Confluent Certified Developer for Apache Kafka (CCDAK)

- Create your own project and apply all the knowledge

Now, let’s get into details!

[wpsm_toplist]

This guide is meant to give direction to anyone pursuing this certification. For the benefit of a wider audience, it starts with a brief introduction to Apache Kafka, followed by the exam requirements and the exam pattern. Finally, it introduces the resources you need to prepare for the exam, mapping out the entire preparation process.

What is Apache Kafka

Originally developed at LinkedIn and open-sourced in 2011 (it became a top-level Apache project in 2012), Apache Kafka is an open-source distributed data streaming platform for real-time processing. It was created to simplify the interactions between source and target systems by providing a central hub for data pipelines. These pipelines serve as communication lines for data and can also be used to react to or transform the data streams as they flow through.

Apache Kafka runs as a cluster of one or more servers, which may span multiple locations. Data in Kafka is stored as “records”, which are grouped into categories called “topics”. This forms the foundation of Kafka’s three main capabilities:

[wpsm_list type="arrow"]

- To publish and subscribe to streams of records

- To store these streams durably and in a fault-tolerant way

- To process these streams in real time

[/wpsm_list]
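To make “records grouped into topics” a little more concrete, here is a toy, in-memory model. It is a simplified sketch, not Kafka’s implementation: it assumes a topic is split into a fixed number of partitions, each an append-only list, and it uses `zlib.crc32` as a stand-in for the murmur2 hash that real Kafka producers apply to record keys. The class and method names (`Topic`, `partition_for`, `append`) are hypothetical. The point it illustrates is that records with the same key land in the same partition and receive increasing offsets, which is where Kafka’s per-key ordering comes from.

```python
import zlib
from dataclasses import dataclass, field


@dataclass
class Topic:
    """Hypothetical toy topic: a fixed number of append-only partition logs."""
    name: str
    num_partitions: int
    partitions: list = field(init=False, repr=False)

    def __post_init__(self):
        # Each partition is an ordered, append-only list of (key, value) records.
        self.partitions = [[] for _ in range(self.num_partitions)]

    def partition_for(self, key: str) -> int:
        # Stand-in hash: real Kafka clients hash the serialized key with murmur2.
        return zlib.crc32(key.encode("utf-8")) % self.num_partitions

    def append(self, key: str, value: str) -> tuple:
        """Append a record and return its (partition, offset)."""
        p = self.partition_for(key)
        log = self.partitions[p]
        log.append((key, value))
        return p, len(log) - 1


topic = Topic("orders", num_partitions=3)
p1, o1 = topic.append("customer-42", "order created")
p2, o2 = topic.append("customer-42", "order paid")
print(p1 == p2, o1, o2)  # True 0 1
```

Because the partition depends only on the key, both events for `customer-42` end up in one partition, in the order they were written.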

These capabilities are delivered through Kafka’s four core APIs. The Producer API allows an application to publish a stream of records to one or more topics. Conversely, the Consumer API allows an application to subscribe to one or more topics and process the stream of records produced to them. The Streams API acts as a stream processor: it consumes input streams from one or more topics and produces output streams to one or more topics. Finally, the Connector API allows building and running reusable producers and consumers that connect Kafka topics to existing applications and data systems.
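The division of labour between the Producer, Consumer, and Streams APIs can be sketched with plain Python functions. This is only a conceptual model under stated assumptions: the “broker” is a dictionary of lists, each consumer group tracks a committed offset per topic, and committing right after each poll loosely imitates auto-commit. The function names (`produce`, `poll`, `uppercase_stream`) are hypothetical and are not the real client APIs; the Connector API is not modelled here.

```python
from collections import defaultdict

# Hypothetical toy broker: each topic is a single append-only list of records.
topics = defaultdict(list)
committed = {}  # (consumer group, topic) -> next offset the group will read


def produce(topic, record):
    """Producer role: append a record to the end of a topic."""
    topics[topic].append(record)


def poll(group, topic):
    """Consumer role: return records after the group's committed offset, then commit."""
    start = committed.get((group, topic), 0)
    batch = topics[topic][start:]
    committed[(group, topic)] = start + len(batch)
    return batch


def uppercase_stream(group, source, sink):
    """Streams role: consume from one topic, transform, and produce to another."""
    for record in poll(group, source):
        produce(sink, record.upper())


produce("greetings", "hello")
produce("greetings", "kafka")
uppercase_stream("upper-app", "greetings", "greetings-upper")
print(poll("reader", "greetings-upper"))  # ['HELLO', 'KAFKA']

produce("greetings", "again")
uppercase_stream("upper-app", "greetings", "greetings-upper")
print(poll("reader", "greetings-upper"))  # ['AGAIN']
```

Note how the second round only sees the new record: each consumer group resumes from its own committed offset, so independent groups (`upper-app`, `reader`) read the same data without interfering with each other.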

[wpsm_video]https://www.youtube.com/watch?v=06iRM1Ghr1k[/wpsm_video]

Exam Requirements

The overview above is not enough to pass the CCDAK exam. Broadly speaking, you need two sets of knowledge to reach the required level of proficiency.

The first set is product knowledge of the related Confluent products. Candidates are expected to have worked with Confluent products for at least 6 to 12 months. The exam is designed so that hands-on experience with the Kafka Producer, Kafka Consumer, Kafka Streams, Kafka Connect, and KSQL queries is essential for success. Conceptual knowledge of building applications and of how Confluent products communicate with each other is expected as well.

The second set is general IT knowledge. You need familiarity with relevant programming languages such as Python and Java, along with in-depth knowledge of at least one of them. Other important areas include networking, distributed systems, stream processing, and familiarity with Linux/Unix.

Exam Pattern

The exam itself is broken down into 3 domains. Application Design is the largest and accounts for roughly 40% of the questions, while Development and Deployment/Testing/Monitoring account for 30% each.

The questions also come in 3 types:

[wpsm_list type="arrow"]

- Multiple-choice questions expect the candidate to select a single correct option.

- Multiple-response questions can have more than one correct answer.

- Sample directions are the third type: they ask candidates to choose the best possible answer based on the given information.

[/wpsm_list]

Official Preparation Documentation

The official documentation for Apache Kafka is available online on the official website. It covers the syllabus for the CCDAK exam in detail, which is why it is recommended as an integral part of your preparation material. It is also versioned with each Kafka release, so it stays up to date with current Apache Kafka operations.

At the time of writing, the current version of the documentation was 2.4. It is broken down into 9 sections, each focusing on a particular topic relevant to the CCDAK exam. Each section is further divided into sub-sections, which makes navigation easier and lets you revisit difficult concepts later. Throughout the documentation, code samples are provided so that you can get hands-on experience and practice implementing them.

Official documentation

https://kafka.apache.org/documentation/

Kafka tutorials

https://kafka-tutorials.confluent.io/

9 Best Apache Kafka Online Courses

In the next section, you’ll find the 9 best Apache Kafka online courses, which will help you master Apache Kafka. They will also prepare you to pass the CCDAK exam on your first attempt, provided you study diligently.

I bought most of these Kafka courses myself a while ago, so I can vouch for their quality. They are also inexpensive, so if you really want to nail the technology, I recommend taking them in the following order.

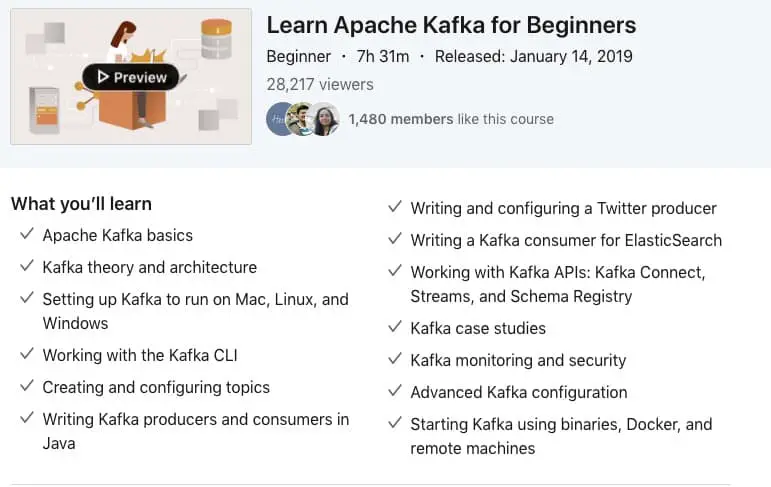

LinkedIn Learning #0 — Same courses but for free

This is your starting point when preparing for the Apache Kafka CCDAK exam. Since you are preparing for the CCDAK exam, you most likely already know Java or Scala. Even so, my best recommendation is to take some of the courses on LinkedIn Learning to refresh your programming skills.

You can get a risk-free 30-day free trial, during which you can review Java, Scala, or even Kafka courses by Stephane Maarek, the same author behind the Udemy courses below.

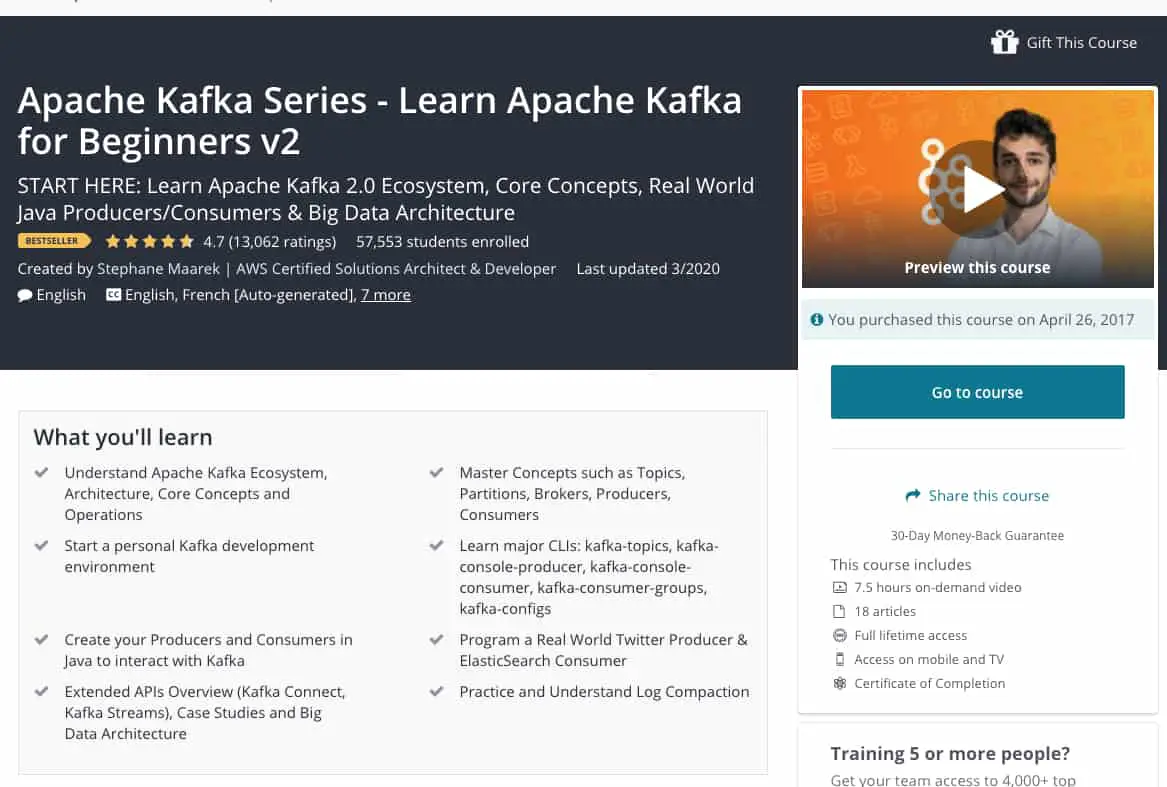

Udemy course #1 – Learn Apache Kafka for Beginners v2

In the Apache Kafka series available on Udemy, “Learn Apache Kafka for Beginners” is the entry point in your preparation for the CCDAK exam. A few prerequisites help you get the most out of this course: you should have a reasonable grip on Java programming and its practical use, and it helps to be comfortable with the Linux command line.

This course establishes the core concepts of the Apache Kafka ecosystem and teaches you how to set up a personal development environment. It gives an extended overview of the 4 core APIs and introduces the main CLIs used to interact with Kafka. It concludes with hands-on practice of log compaction and walks through programming a real-world Producer and Consumer.
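Log compaction is easier to reason about with a small model. The function below is a hedged sketch of the idea, not Kafka’s log cleaner: it keeps only the latest record for each key and treats a `None` value as a tombstone that deletes the key. In real Kafka (topics with `cleanup.policy=compact`), tombstones are kept for `delete.retention.ms` before removal and the active segment is never compacted, neither of which is modelled here. The name `compact` is hypothetical.

```python
def compact(log):
    """Keep only the latest value per key; drop keys whose latest value is a tombstone (None).

    `log` is a list of (offset, key, value) tuples in offset order.
    Surviving records keep their original offsets and relative order.
    """
    latest = {}
    for offset, key, _value in log:
        latest[key] = offset  # later offsets overwrite earlier ones
    return [
        (offset, key, value)
        for offset, key, value in log
        if latest[key] == offset and value is not None
    ]


log = [
    (0, "user-1", "alice@old.example"),
    (1, "user-2", "bob@example"),
    (2, "user-1", "alice@new.example"),
    (3, "user-2", None),  # tombstone: delete user-2
    (4, "user-3", "carol@example"),
]
print(compact(log))
# [(2, 'user-1', 'alice@new.example'), (4, 'user-3', 'carol@example')]
```

Offsets are preserved rather than renumbered, which matches how a compacted Kafka log simply has gaps where older records used to be.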

Duration: 7.5 hours

Available here: link to the course on Udemy

Udemy course #2 – Kafka Connect Hands-on Learning

The second course in the series focuses on Kafka Connect, a scalable tool for streaming data between Apache Kafka and external systems. In addition to the knowledge from the beginner Kafka course, some familiarity with Docker is required.

The course covers the main operations of Kafka Connect. You learn the architecture and fundamentals of Kafka Connect, which prepares you to write your own Kafka Connector. It teaches how to configure and run both Source and Sink Connectors, explains how to deploy connectors in standalone and distributed mode, and shows how to launch a Kafka Connect cluster using Docker.
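The difference between standalone and distributed mode shows up mostly in how connector settings are supplied. The sketch below assumes the `FileStreamSource` example from the Apache Kafka quickstart (`name`, `connector.class`, `tasks.max`, `file`, `topic`) and renders the same settings two ways: as a `.properties` file, which standalone mode takes on the command line (as in `bin/connect-standalone.sh config/connect-standalone.properties config/connect-file-source.properties`), and as a JSON body for `POST /connectors` on the Connect REST API, which is how connectors are submitted in distributed mode. The helper function names are hypothetical.

```python
import json

# Settings modeled on the FileStreamSource example from the Apache Kafka quickstart.
connector = {
    "name": "local-file-source",
    "config": {
        "connector.class": "FileStreamSource",
        "tasks.max": "1",
        "file": "test.txt",
        "topic": "connect-test",
    },
}


def to_standalone_properties(conn):
    """Standalone mode: settings go into a .properties file passed to connect-standalone.sh."""
    lines = [f"name={conn['name']}"]
    lines += [f"{key}={value}" for key, value in conn["config"].items()]
    return "\n".join(lines) + "\n"


def to_distributed_payload(conn):
    """Distributed mode: the same settings are sent as JSON to the REST API (POST /connectors)."""
    return json.dumps(conn, indent=2)


print(to_standalone_properties(connector))
print(to_distributed_payload(connector))
```

Keeping one dictionary as the source of truth is just a convenience for this sketch; the connector itself sees identical key/value settings in both modes.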

Duration: 4.5 hours

Available here: link to the course on Udemy

Udemy course #3 – Kafka Streams for Data Processing

As the name suggests, this course targets Kafka Streams, Kafka’s stream processing library. Again, prior knowledge of Apache Kafka is needed, along with an understanding of Java 8.

A key takeaway from this course is how to develop and scale Kafka Streams applications, which equips you to write and package your own application. More specifically, the important learning outcomes include configuring Kafka Streams for exactly-once semantics, programming with the high-level DSL, and writing tests for a Kafka Streams topology.
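The classic word-count topology, and the habit of testing it by feeding input and checking output, can be mimicked in a few lines. This is a Python analogy, not the Kafka Streams API (which is Java): it imitates a `flatMapValues` → `groupBy` → `count` pipeline, with a `Counter` standing in for the state store, and the final lines play the role that `TopologyTestDriver` plays in real Kafka Streams tests. Exactly-once processing (the `processing.guarantee` setting in the real library) and record caching are not modelled. The function name is hypothetical.

```python
from collections import Counter


def word_count_topology(lines, store=None):
    """Mimic a DSL pipeline: flatMapValues(split) -> groupBy(word) -> count().

    `store` plays the role of the state store behind count(); every input word
    emits the updated (word, count) pair, like a KTable changelog record.
    """
    store = Counter() if store is None else store
    output = []
    for line in lines:
        for word in line.lower().split():        # flatMapValues: one record per word
            store[word] += 1                     # groupBy + count: update the aggregate
            output.append((word, store[word]))   # emit the new count downstream
    return output, store


# A test in the spirit of TopologyTestDriver: pipe input in, assert on the output.
out, state = word_count_topology(["Kafka streams", "kafka connect"])
print(out)  # [('kafka', 1), ('streams', 1), ('kafka', 2), ('connect', 1)]
```

Passing the `store` back in on a later call continues the counts, which mirrors how a Streams application resumes from its state store rather than starting over.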

Duration: 5 hours

Available here: link to the course on Udemy

Udemy course #4 – KSQL for Stream Processing – Hands On!

The “KSQL for Stream Processing – Hands On!” course revolves around KSQL, Confluent’s stream processing framework. The course has no mandatory prerequisites beyond the basics of Apache Kafka, although an understanding of Kafka Streams will speed up learning and improve your experience of the course.

This course covers all the basics of KSQL, so no prior familiarity with it is needed. It covers creating streams and tables and generating test data with ksql-datagen. A wide range of data formats, such as Avro and CSV, is used to build a deep understanding of KSQL. Different KSQL operations, including advanced ones such as joins and windowing, are taught as well. To apply everything in practice, you build a taxi booking application.
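Windowing is the KSQL feature that most often trips people up, so here is a hedged Python sketch of what a tumbling window aggregation computes (in KSQL this corresponds to a query with a `WINDOW TUMBLING (SIZE ...)` clause and `GROUP BY`). It assumes event timestamps in milliseconds and windows aligned to multiples of the window size; late-arriving data, grace periods, and retention are ignored. The taxi-booking events and the function name are made up for illustration.

```python
from collections import defaultdict


def tumbling_counts(events, size_ms):
    """Count events per (key, window start) using fixed, non-overlapping windows.

    `events` is an iterable of (timestamp_ms, key). Windows start at multiples
    of `size_ms`, so every event falls into exactly one window.
    """
    counts = defaultdict(int)
    for ts, key in events:
        window_start = ts - (ts % size_ms)
        counts[(key, window_start)] += 1
    return dict(counts)


# Hypothetical taxi-booking events: (event time in ms, pickup zone)
bookings = [(1_000, "downtown"), (30_000, "downtown"), (61_000, "downtown"), (62_000, "airport")]
print(tumbling_counts(bookings, size_ms=60_000))
# {('downtown', 0): 2, ('downtown', 60000): 1, ('airport', 60000): 1}
```

The same key can appear in several windows, which is why windowed KSQL results are keyed by both the grouping key and the window bounds.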

Duration: 3.5 hours

Available here: link to the course on Udemy

Udemy course #5 – Confluent Schema Registry & REST Proxy

This course teaches three important technologies that give greater insight into the Apache Kafka ecosystem. Apache Avro is an efficient data serialization format, Confluent Schema Registry is the go-to store for the Avro schemas used by each topic, and Confluent REST Proxy lets you produce and consume Avro data over HTTP without using Java.

The course starts with simple Avro schemas and gradually moves towards more complex ones. It introduces how a Java Producer and Consumer can use Avro data and Schema Registry, and then moves on to the different strategies for schema evolution. Staying with Java, the course also teaches how to create, read, and write Avro objects in Java. Using REST Proxy from a REST client is another major learning outcome.
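One reason Schema Registry is efficient is that messages carry a small schema ID instead of the full schema. With Confluent’s serializers, each message value starts with a magic byte `0`, followed by the schema ID as a 4-byte big-endian integer, followed by the Avro-encoded payload. The sketch below only illustrates that framing; it does not perform Avro encoding or talk to a registry, and `frame`/`unframe` are hypothetical names. The example payload `b"\x06foo"` is the Avro binary encoding of the string `"foo"`.

```python
import struct

MAGIC_BYTE = 0  # first byte of every message in Confluent's wire format


def frame(schema_id: int, avro_payload: bytes) -> bytes:
    """Prefix an Avro-encoded payload with the magic byte and a 4-byte big-endian schema ID."""
    return struct.pack(">bi", MAGIC_BYTE, schema_id) + avro_payload


def unframe(message: bytes):
    """Split a framed message back into (schema_id, avro_payload)."""
    magic, schema_id = struct.unpack(">bi", message[:5])
    if magic != MAGIC_BYTE:
        raise ValueError(f"unknown magic byte: {magic}")
    return schema_id, message[5:]


msg = frame(42, b"\x06foo")
print(msg.hex())  # 000000002a06666f6f
```

A consumer reads the 5-byte header, fetches (and caches) schema 42 from the registry, and only then decodes the remaining bytes.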
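Schema evolution questions usually boil down to a few rules. The function below is a simplified sketch of a BACKWARD compatibility check (a reader using the new schema must be able to decode data written with the old one): removing a field is fine, adding a field requires a default, and a shared field must keep its type. Real Avro schema resolution also allows type promotions, unions, aliases, and nested records, which this sketch deliberately ignores; the function name and the `Customer` schemas are hypothetical.

```python
def is_backward_compatible(old_schema: dict, new_schema: dict) -> bool:
    """Simplified BACKWARD check for flat Avro record schemas (JSON form as dicts)."""
    old_fields = {f["name"]: f for f in old_schema["fields"]}
    for field in new_schema["fields"]:
        old = old_fields.get(field["name"])
        if old is None:
            # New field: old data lacks it, so the reader needs a default to fill in.
            if "default" not in field:
                return False
        elif old["type"] != field["type"]:
            # Type changes (even valid Avro promotions) are rejected in this sketch.
            return False
    # Fields removed in the new schema are simply ignored by the reader.
    return True


v1 = {"type": "record", "name": "Customer", "fields": [
    {"name": "id", "type": "long"},
    {"name": "email", "type": "string"},
]}
v2 = {"type": "record", "name": "Customer", "fields": [
    {"name": "id", "type": "long"},
    {"name": "email", "type": "string"},
    {"name": "vip", "type": "boolean", "default": False},
]}
v3 = {"type": "record", "name": "Customer", "fields": [
    {"name": "id", "type": "long"},
    {"name": "phone", "type": "string"},  # added without a default
]}
print(is_backward_compatible(v1, v2))  # True
print(is_backward_compatible(v1, v3))  # False
```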

Duration: 4.5 hours

Available here: link to the course on Udemy

Udemy course #6 – Kafka Cluster Setup & Administration

As established earlier, a Kafka cluster is a group of servers that may be spread across multiple locations. This course focuses on setting up and administering Kafka clusters. That is why, apart from the usual prerequisites we have seen so far, knowledge of servers and AWS is also needed for this course.

This course introduces ZooKeeper clusters and how Kafka depends on them. It also teaches how to set up administrative tools such as Kafka Manager using Docker. Kafka clusters are the focus throughout the course: their setup and configuration are explained, along with how to shut down and recover Kafka brokers. Optimizing for a given workload and maintaining the Kafka cluster are also important topics within this course.
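A useful piece of arithmetic when sizing a ZooKeeper ensemble: the ensemble stays available only while a strict majority of its servers is up. The small function below (a hypothetical helper, shown as a sketch) computes how many server failures an ensemble of a given size can survive.

```python
def max_failures_tolerated(ensemble_size: int) -> int:
    """A ZooKeeper ensemble keeps working while a strict majority (quorum) of servers is up."""
    quorum = ensemble_size // 2 + 1
    return ensemble_size - quorum


for n in range(1, 8):
    print(f"{n} servers -> tolerates {max_failures_tolerated(n)} failure(s)")
```

An ensemble of 3 tolerates 1 failure, 4 still tolerates only 1, and 5 tolerates 2. Adding a server to an odd-sized ensemble does not buy extra fault tolerance, which is why ensembles are usually sized at 3 or 5.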

Duration: 4 hours

Available here: link to the course on Udemy

Udemy course #7 – Kafka Security (SSL SASL Kerberos ACL)

Kafka security protects data streams and is broken down into 3 parts: encryption (SSL), authentication (SSL and SASL), and authorization (ACL). This course starts from scratch in terms of security, so no prior knowledge of SSL, SASL, or Kerberos is needed. However, as we have come to expect, knowledge of Kafka and Linux is necessary.

It covers the setup and usage of all 3 aspects, i.e. SSL, SASL, and ACL. It also explains how to configure Kafka clients to work with the security set up on the cluster. Finally, it addresses ZooKeeper security to complete the discussion.
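The core decision an ACL authorizer makes can be sketched in a few lines. This is a simplified model, not Kafka’s authorizer: super users are always allowed, a matching Deny overrides any matching Allow, and a request with no matching Allow is denied (similar to Kafka’s default behaviour, where `allow.everyone.if.no.acl.found` is false). Host filters, wildcard and prefixed resource patterns, and implied operations (such as Read implying Describe) are left out. The `Acl` class and `is_authorized` function are hypothetical; the `User:alice` principal format follows Kafka’s convention.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Acl:
    principal: str   # e.g. "User:alice"
    operation: str   # e.g. "Read", "Write"
    resource: str    # e.g. "Topic:payments" (literal names only in this sketch)
    permission: str  # "Allow" or "Deny"


def is_authorized(acls, principal, operation, resource, super_users=frozenset()):
    """Simplified authorization decision: super users pass, Deny wins, default deny."""
    if principal in super_users:
        return True
    matching = [
        a for a in acls
        if a.principal == principal and a.operation == operation and a.resource == resource
    ]
    if any(a.permission == "Deny" for a in matching):
        return False
    return any(a.permission == "Allow" for a in matching)


acls = [
    Acl("User:alice", "Read", "Topic:payments", "Allow"),
    Acl("User:alice", "Write", "Topic:payments", "Allow"),
    Acl("User:alice", "Write", "Topic:payments", "Deny"),
]
print(is_authorized(acls, "User:alice", "Read", "Topic:payments"))   # True
print(is_authorized(acls, "User:alice", "Write", "Topic:payments"))  # False (Deny wins)
print(is_authorized(acls, "User:bob", "Read", "Topic:payments"))     # False (no matching Allow)
```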

Duration: 4 hours

Available here: link to the course on Udemy

Udemy course #8 – Kafka Monitoring & Operations

The course focuses on 3 primary pillars of Apache Kafka: administration, monitoring, and operations. An understanding of Kafka and Linux is naturally required for this course.

This course teaches you how to set up monitoring and administration. Monitoring is set up with Grafana and Prometheus, while administration uses tools such as Kafka Manager. Various Kafka cluster operations are discussed, including setting up a multi-broker Kafka cluster, rebalancing partitions, adding, removing, and replacing brokers, and upgrading the cluster itself.
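After adding a broker, existing partitions do not move to it automatically; you hand `kafka-reassign-partitions.sh` a plan through `--reassignment-json-file`. The sketch below builds such a plan in that tool’s JSON layout (`version`, then a list of `topic`/`partition`/`replicas` entries) using a plain round-robin spread. It is only an illustration: Kafka’s own `--generate` option and its internal assignment logic use a different placement strategy and can account for racks, and the function name is hypothetical.

```python
import json


def round_robin_assignment(topic, num_partitions, replication_factor, brokers):
    """Spread partition replicas over brokers round-robin, in reassignment-JSON layout.

    The first replica in each list is the preferred leader, so leadership is
    spread across brokers as well.
    """
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    partitions = []
    for p in range(num_partitions):
        replicas = [brokers[(p + r) % len(brokers)] for r in range(replication_factor)]
        partitions.append({"topic": topic, "partition": p, "replicas": replicas})
    return {"version": 1, "partitions": partitions}


# Hypothetical scenario: broker 4 joins brokers 1-3; spread "orders" over all four.
plan = round_robin_assignment("orders", num_partitions=4, replication_factor=2, brokers=[1, 2, 3, 4])
print(json.dumps(plan, indent=2))
```

Saving this output to a file and passing it with `--execute` is the usual next step; reviewing the plan before executing it matters because moving replicas generates significant replication traffic.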

Duration: 5 hours

Available here: link to the course on Udemy

Udemy course #9 – Confluent Certified Developer for Apache Kafka (CCDAK)

The final piece of the puzzle, this course is a set of 150 CCDAK practice questions. After you have covered all the course material, it provides ample practice before the exam itself. The course consists of 3 practice tests with 50 questions each. For every incorrect answer, an explanation clarifies the underlying concept so that you understand how to arrive at the correct answer.

Available here: link to the course on Udemy

Conclusion

With this guide, any aspiring candidate should be able to achieve the goal of earning the CCDAK certification. The preparation material highlighted here covers what you need to develop a comprehensive understanding of the necessary Apache Kafka concepts. However, to get the most out of these resources, you need the passion and drive to learn and excel with Apache Kafka. If you dedicate enough time to studying the relevant topics with real interest, you stand a good chance of passing on your first attempt and avoiding the hassle and cost of a retake!